Introduction to video analytics and regulations

With the rapid advancement of AI systems, the technical possibilities in the field of video analytics (VA) are expanding. Alongside progress in applications for operational efficiency, security, and safety, M2P closely monitors the legal landscape governing data protection.

To regulate AI systems at EU level, the European Parliament is currently debating the Artificial Intelligence Act (AIA). In this context, this article aims to answer two key questions:

- What is the Artificial Intelligence Act (AIA)?

- How does the AIA impact video analytics use cases?

This article focuses on the fundamental legal aspects of video analytics. Certain applications, such as those in medicine or autonomous driving, are not covered here. Note also that the AIA is still a draft law under review and may not yet cover all aspects.

Regulation of data processing systems in the EU: Current legal landscape

In the European Union, the handling of personal data in video analytics systems is governed by Chapter II (Articles 5 – 11) of the General Data Protection Regulation (GDPR)[7]. Adopted in 2016 and applicable since May 2018, the GDPR provides the foundational framework for data protection within the EU. However, it lacks comprehensive guidelines for the technical, ethical, and data protection challenges, and for the transparency requirements, associated with artificial intelligence.

Regulations of AI systems through the new law

For the reasons mentioned above, the European Union is drafting new legislation known as the Artificial Intelligence Act (AIA). Its full title is the “Proposal for a Regulation of the European Parliament and of the Council laying down harmonised rules on artificial intelligence (Artificial Intelligence Act) and amending certain Union legislative acts”[1]. The proposal received overwhelming support and was adopted with 499 “yes” votes, 28 “no” votes, and 93 abstentions during the first reading before the European Parliament on June 14, 2023[2].

As part of its broader digital strategy, the EU plans to regulate artificial intelligence (AI) to facilitate the development and use of this innovative technology under improved conditions. The draft emphasizes that AI “can bring a wide array of economic and societal benefits across the entire spectrum of industries and social activities”[1].

The European Parliament’s interpretation of AI

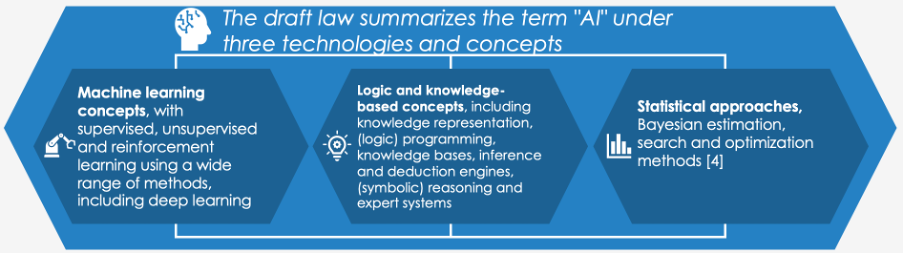

The European Parliament defines its understanding of AI using three key concepts. The definition provided in Annex I of the AIA[4] is broad, encompassing systems based on machine learning, logic, or statistics, as illustrated in Figure 1.

The EU’s declared goal is to ensure that AI systems are secure, transparent, traceable, unbiased, and environmentally conscious. Rather than being entirely automated, AI systems should remain under human control to prevent potential harm[3]. In practical terms, the current version of the law would restrict the use of, for example, facial recognition software[2]. Furthermore, systems that are considered high-risk but not dangerous enough to warrant an outright ban will face more stringent regulation. For instance, providers of AI applications such as OpenAI, Inc. (which runs ChatGPT) would need to disclose more details about their training data and implement additional measures to limit the generation of illegal content[6].

Scope of application and affected stakeholders

The AIA establishes a clear division between providers and users of AI systems. Providers include all legal entities, authorities, institutions, or other parties engaged in either developing an AI system or supervising its development. The regulations apply as soon as the AI system is deployed within the Union, irrespective of whether the parties involved are located or reside in a third country[5].

The new law classifies AI systems into four risk categories

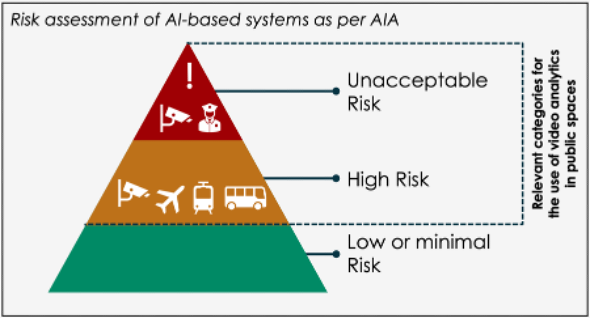

The Artificial Intelligence Act classifies systems that use AI according to their level of risk, and the applicable rules depend on the category a system is assigned to. Section 5.2.2 of the proposal names three risk levels: “unacceptable risk”, “high risk”, and “low or minimal risk”. The last of these is commonly split into limited and minimal risk, which yields the four categories shown in Figure 2. The first two categories are the relevant ones for camera systems used in the wider transportation industry, particularly those deployed in critical infrastructures such as airports. The following paragraphs therefore compare how systems are assigned to the two most severe levels of risk (“unacceptable” and “high”).

Under Article 5, AI systems whose risk is deemed unacceptable are prohibited outright. This category includes, for example, so-called “social scoring systems”. Further, “the use of ‘real time’ remote biometric identification systems in publicly accessible spaces for the purpose of law enforcement is also prohibited”[1], subject to a limited number of exceptions. Exceptions may be granted, for example, if the identification occurs only after a significant amount of time, is used to prosecute serious crimes, and is authorized by a judge[3].

According to Article 6[1] and Annexes II and III[4], AI systems are classified as high-risk if they fall into either of the following two categories:

- AI systems deployed in products covered by EU product safety regulations. These include aviation products, vehicles, medical devices, and elevators;

- AI systems in the specific areas listed in Annex III, which must be registered in an EU database.

Annex III of the draft law lists, among others, the following applications as high-risk AI systems:

- Biometric identification and categorisation of natural persons:

  - AI systems intended to be used for the ‘real-time’ and ‘post’ remote biometric identification of natural persons.

- Management and operation of critical infrastructures:

  - AI systems intended to be used as safety components in the management and operation of road traffic and the supply of water, gas, heating and electricity[4].

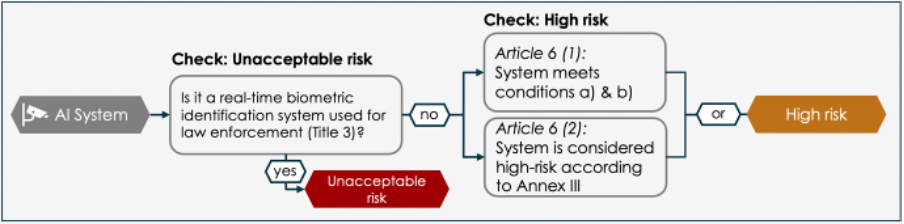

Risk classification of an AI system using the example of video analytics

Figure 3 outlines how an AI system for video analytics use cases is classified under the AIA draft law. For clarity, the following terms are quoted from the AIA:

- ‘Remote biometric identification system’ means an AI system for the purpose of identifying natural persons at a distance through the comparison of a person’s biometric data with the biometric data contained in a reference database […];

- ‘Law enforcement’ means activities carried out by law enforcement authorities for the prevention, investigation, detection or prosecution of criminal offences or the execution of criminal penalties, including the safeguarding against and the prevention of threats to public security;

- The conditions in Article 6 (1) for classification as a high-risk system, both of which must be fulfilled, are as follows:

  - the AI system is intended to be used as a safety component of a product, or is itself a product, covered by the Union harmonisation legislation listed in Annex II;

  - the product whose safety component is the AI system, or the AI system itself as a product, is required to undergo a third-party conformity assessment with a view to the placing on the market or putting into service of that product pursuant to the Union harmonisation legislation listed in Annex II[4].
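The classification logic described in this article can be approximated as a short decision procedure. The following Python sketch is illustrative only and is not legal advice: the class `VideoAnalyticsUseCase`, its field names, and the function `classify` are hypothetical and do not come from the AIA. The sketch covers only the criteria discussed here (the Article 5 prohibition on real-time remote biometric identification for law enforcement, the two conditions of Article 6 (1), and the two Annex III areas quoted above) and leaves out everything else, such as social scoring.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    UNACCEPTABLE = "unacceptable risk"
    HIGH = "high risk"
    LOW_OR_MINIMAL = "low or minimal risk"


@dataclass(frozen=True)
class VideoAnalyticsUseCase:
    """Hypothetical description of a use case; all field names are illustrative."""
    remote_biometric_identification: bool = False
    real_time: bool = False
    publicly_accessible_space: bool = False
    law_enforcement_purpose: bool = False
    article_5_exception_applies: bool = False
    # Article 6 (1), first condition: safety component of (or itself) an Annex II product
    annex_ii_product_or_safety_component: bool = False
    # Article 6 (1), second condition: third-party conformity assessment required
    third_party_conformity_assessment: bool = False
    # Annex III: safety component in the management of critical infrastructure
    critical_infrastructure_safety_component: bool = False


def classify(uc: VideoAnalyticsUseCase) -> RiskLevel:
    """Simplified reading of the draft; assumes the flags were assessed correctly."""
    # Article 5: real-time remote biometric identification in publicly
    # accessible spaces for law enforcement is prohibited unless an exception applies.
    if (uc.remote_biometric_identification and uc.real_time
            and uc.publicly_accessible_space and uc.law_enforcement_purpose
            and not uc.article_5_exception_applies):
        return RiskLevel.UNACCEPTABLE
    # Article 6 (1): both conditions must be fulfilled.
    if uc.annex_ii_product_or_safety_component and uc.third_party_conformity_assessment:
        return RiskLevel.HIGH
    # Annex III: remote biometric identification ('real-time' and 'post')
    # and safety components of critical infrastructure.
    if uc.remote_biometric_identification or uc.critical_infrastructure_safety_component:
        return RiskLevel.HIGH
    return RiskLevel.LOW_OR_MINIMAL


# Example: after-the-fact face matching at an airport, not by law enforcement.
example = VideoAnalyticsUseCase(
    remote_biometric_identification=True,
    publicly_accessible_space=True,
)
print(classify(example).value)
```

The order of the checks mirrors the severity of the categories: the prohibition is tested first, so a use case that would also match Annex III is never downgraded to high-risk by accident. A real assessment would require a legal review of each flag rather than a simple boolean.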

Summary and conclusion on video analytics use cases under the AIA draft law

In summary, the current draft law suggests that AI-based video analytics remains viable but will be subject to stringent regulation. Given that video analytics systems from companies in the field of travel, transportation and logistics are predominantly deployed in publicly accessible spaces, and often in critical infrastructures, they are likely to be classified as high-risk systems at the very least. To remain compliant with both the GDPR and the AIA, operators of such systems will need to meet the requirements stipulated for high-risk AI systems.

Next steps to finalize the draft law: Political process

Despite a successful vote in the European Parliament, the law still has a long way to go before it is passed. The next step in the EU process is the so-called trilogues, which involve discussions between legislators, Commission officials, and representatives from member states. The current version of the Artificial Intelligence Act, which has faced resistance from major tech companies due to its extensive scope of application, is likely to undergo significant amendments before it reaches final adoption. M2P will closely monitor the debates in the European Parliament in order to derive implications for the use of artificial intelligence in camera systems and to provide informed advice on its regulation.

Sources

[1] https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=celex%3A52021PC0206 – (01/10/2023)

[3] https://www.europarl.europa.eu/news/en/headlines/society/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence – (10/10/2023)

[4] https://eur-lex.europa.eu/resource.html?uri=cellar:e0649735-a372-11eb-9585-01aa75ed71a1.0001.02/DOC_2&format=PDF – (18/10/2023)

[5] https://eu-digitalstrategie.de/ai-act/ – (20/10/2023)

[6] https://www.nytimes.com/2023/06/14/technology/europe-ai-regulation.html

[7] https://eur-lex.europa.eu/legal-content/EN/TXT/HTML/?uri=CELEX:32016R0679 – (06/11/2023)